Understanding LSTM: A Deep Dive into Long Short-Term Memory Networks

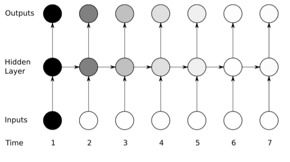

Long Short-Term Memory (LSTM) networks have had a major impact on deep learning, particularly in tasks that involve sequential data. Unlike traditional Recurrent Neural Networks (RNNs), LSTMs use a gated cell state that mitigates the vanishing gradient problem, enabling them to capture long-term dependencies in data. Exploding gradients can still occur and are usually handled separately, for example with gradient clipping. In this article, we will explore how LSTMs work, their components, advantages, and applications.

Core Components of LSTM

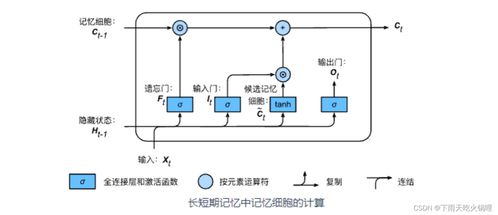

The core of LSTM lies in its cell state and three gate mechanisms: the forget gate, the input gate, and the output gate.

| Gate | Description |
|---|---|
| Forget Gate | Determines which information from the previous cell state should be discarded. |
| Input Gate | Decides which new information should be stored in the cell state. |
| Output Gate | Controls which information from the cell state is exposed as the hidden state (the output). |

The cell state acts as a “memory” line that passes through the entire chain, with only a few element-wise operations applied to it: a multiplication by the forget gate and an addition of new, gated information. This lets information flow along it largely unchanged when the gates allow it. At each time step, the forget gate and input gate update the cell state, and the output gate determines the hidden state that is passed on.
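To make the interplay of the gates concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the commonly used formulation with sigmoid gates and a tanh candidate, which the article does not spell out. The names `lstm_step`, `affine`, `init_params` and the layout of the `params` dictionary are hypothetical and chosen only for illustration. It is not an optimized or trainable implementation.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """Compute W @ v + b, where W is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step. `params` maps a gate name to (W, b) (assumed layout)."""
    v = h_prev + x_t  # concatenation [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(*params["forget"], v)]       # what to discard
    i = [sigmoid(z) for z in affine(*params["input"], v)]        # what to store
    g = [math.tanh(z) for z in affine(*params["candidate"], v)]  # new candidate values
    o = [sigmoid(z) for z in affine(*params["output"], v)]       # what to expose
    # Cell state: keep part of the old memory, add part of the candidate.
    c_t = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Hidden state: gated, squashed view of the cell state.
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (illustrative initialization only)."""
    rng = random.Random(seed)
    def layer():
        W = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
             for _ in range(hidden_size)]
        return W, [0.0] * hidden_size
    return {name: layer() for name in ("forget", "input", "candidate", "output")}

# Run a short sequence of 3-dimensional inputs through a cell with 4 hidden units.
params = init_params(input_size=3, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
sequence = [[0.5, -0.1, 0.3], [0.0, 0.2, -0.4]]
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, params)
```

Note that the cell state is only scaled by the forget gate and then added to. If the forget gate is close to 1 and the input gate is close to 0, the old cell state passes through the step almost unchanged.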

Advantages of LSTM

LSTMs offer several advantages over traditional RNNs:

- Mitigating vanishing gradients: The additive cell-state update lets gradients flow across many time steps, allowing the model to capture long-term dependencies in data. Exploding gradients are not eliminated by the architecture and are typically controlled with techniques such as gradient clipping.
- Strong sequence modeling capability: LSTMs capture temporal dependencies and contextual information in sequential data.
- Flexibility: The architecture can be adjusted, for example by changing the hidden size or the number of stacked layers, to fit different application scenarios.
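The gradient argument in the first point can be sketched with a short derivation. The notation is an assumption, since the article defines no symbols: $c_t$ is the cell state, $f_t$ and $i_t$ are the forget and input gate activations, $\tilde{c}_t$ is the candidate, and $\odot$ is element-wise multiplication. The derivation considers only the direct path through the cell state and ignores the indirect dependence of the gates on earlier states.

$$
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\quad\Longrightarrow\quad
\frac{\partial c_t}{\partial c_{t-1}} \approx \operatorname{diag}(f_t)
$$

$$
\frac{\partial c_t}{\partial c_{t-k}} \approx \prod_{j=t-k+1}^{t} \operatorname{diag}(f_j)
$$

When the forget gate stays close to 1, this product stays close to the identity, so the gradient is preserved over many steps. For comparison, a plain RNN with $h_t = \tanh(W h_{t-1} + U x_t + b)$ has $\partial h_t / \partial h_{t-1} = \operatorname{diag}(1 - h_t^2)\, W$, so the same weight matrix $W$ is multiplied in at every step, which tends to shrink or blow up the gradient as $k$ grows.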

Applications of LSTM

LSTMs have found wide applications in various fields, including:

- Speech recognition: LSTMs have achieved strong results in speech recognition, where they model the temporal structure of speech signals to identify speech units and words.
- Natural language processing: LSTMs are widely used in tasks such as text classification, sentiment analysis, and machine translation, where they capture contextual information and semantic relationships in sentences.
- Time series prediction: LSTMs are commonly applied to forecasting problems such as stock prices and weather, where they learn trends and patterns from past observations to predict future values.
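For time series prediction, the raw series is usually first converted into pairs of input windows and targets before it is fed to an LSTM. The following is a minimal sketch of that preprocessing step. The function name `make_windows` and the example values are hypothetical, and it assumes a one-step-ahead forecast on a one-dimensional series.

```python
def make_windows(series, window):
    """Split a 1-D series into (input window, next value) pairs."""
    if window < 1 or window >= len(series):
        raise ValueError("window must be between 1 and len(series) - 1")
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])  # `window` past observations
        targets.append(series[start + window])       # the value that follows them
    return inputs, targets

# Made-up example values, for illustration only.
series = [10, 11, 13, 12, 15, 16]
X, y = make_windows(series, window=3)
# X == [[10, 11, 13], [11, 13, 12], [13, 12, 15]]
# y == [12, 15, 16]
```

Each input window would then be fed to the LSTM one value per time step, with the final hidden state used to predict the corresponding target.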

Future Trends of LSTM

As deep learning technology continues to evolve, LSTMs are also being integrated with other models to further improve performance. Some of the future trends include:

- Combining with other models: Integrating LSTMs with other architectures, such as Transformers, may yield more efficient and accurate models for more complex tasks.
- Optimizing algorithms and hardware acceleration: Researchers continue to refine LSTM training algorithms to improve training speed and performance. High-performance hardware, such as GPUs, also speeds up LSTM training and inference.

In conclusion, LSTMs have become an essential tool in the field of deep learning, enabling the effective processing of sequential data. With their unique advantages and wide range of applications, LSTMs will continue to play a crucial role in the development of AI technology.